LaunchReady UI was my first attempt to build a sellable product on the side. It was a great experience that covered everything from market research, company formation, and refining a sales pitch to some interesting technical and usability challenges.

I worked on this project on nights and weekends from April 2017 through November, spending around 300 hours and around $1,400 to build a production-ready product, advertising, documentation and initial content, hosting and delivery automation on 4 platforms, and the legal work for the company formation and service agreement.

Blog Posts

I wrote a small series of blog posts specifically about LaunchReady UI:

- 2018: Meet LaunchReady UI: A SaaS Product That Almost Was

- 2018: LaunchReady UI: Focus on the Customer

- 2018: LaunchReady UI: Don’t Get Distracted, Getting Stuff Done

And a number of background tech posts:

- 2017: Creating a Static-Generated Marketing Site

- 2017: Mapping Complex Types to/from the DB with PetaPoco

- 2017: SPA Routing in ASP.Net Core

- 2017: Mapping Complex types to/from JSON with JSON.Net

- 2017: Multiple NuGet Methods for VS2017 + MSBuild 15 in TeamCity

- 2017: Creating a local Service Fabric Cluster

- 2017: Custom Authentication in ASP.Net Core (without Identity)

Technologies

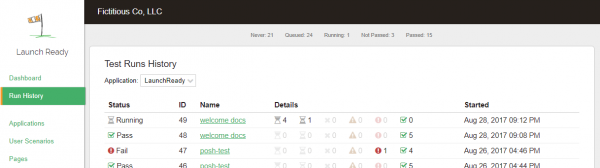

LaunchReady UI was hosted in Azure and composed of a front-end API, back-end test agents, and the marketing site.

Front-end + API

The front-end and API were hosted on Azure App Services, with Application Insights wired in for traffic analysis, monitoring, and visibility.

The backend used ASP.NET Core on .NET 4.6, with a combination of Azure SQL Database and Azure Blob Storage for persistence. The AsyncPoco library handled the data persistence logic. Custom authentication code supported interactive users, public API calls with rotatable keys, and internal service-to-service calls with HMAC signatures. Autofac was included for IoC.

The frontend used KnockoutJS for view binding, RequireJS for modules, Zousan for promises, D3.js for charting, Moment.js for dates, LESS for styles, and a few other odds and ends. The build process used gulp and eslint.

Only a small number of unit tests were used on the back-end, mostly focused on ensuring endpoints had defined security. Error handling sent every unhandled exception by email to a dedicated account.
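A test that ensures every endpoint has defined security can be quite small. The following is a minimal sketch in Python rather than the C# the project used, and every name in it (`route`, `ROUTES`, `ALLOWED_POLICIES`, the paths, and the policy names) is hypothetical. In ASP.NET Core the same idea would likely be done by reflecting over controller actions and checking for authorization attributes, but that is an assumption, not a description of the original tests.

```python
# Hypothetical route registry: each handler must declare an access policy.
ROUTES = {}

# Assumed policy names, loosely mirroring the three caller types described
# above (interactive users, public API keys, internal services).
ALLOWED_POLICIES = {"anonymous", "user", "api_key", "service"}


def route(path, access=None):
    """Register a handler for a path and record its declared access policy."""
    def decorator(handler):
        handler.access = access
        ROUTES[path] = handler
        return handler
    return decorator


@route("/api/tests", access="api_key")
def list_tests():
    return []


@route("/api/agent/work", access="service")
def next_work():
    return None


@route("/status", access="anonymous")
def status():
    return "ok"


def endpoints_missing_security(routes):
    """Return the sorted paths whose handlers lack a recognized access policy."""
    return sorted(
        path
        for path, handler in routes.items()
        if getattr(handler, "access", None) not in ALLOWED_POLICIES
    )


def test_every_endpoint_declares_security():
    # Fails as soon as someone adds an endpoint without stating who may call it.
    assert endpoints_missing_security(ROUTES) == []
```

The value of a test like this is that it fails by default: a new endpoint has to make an explicit choice, even if that choice is "anonymous".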

Test Agents

The test agents ran in Service Fabric clusters, polling the API above to ask for work and execute tests.
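The polling model can be sketched as a simple loop: ask for work, run it, report the outcome, and sleep when there is nothing to do. This is an illustrative Python sketch, not the original C# agent. The function names, the job and result shapes, and the idle interval are all assumptions.

```python
import time


def run_agent(fetch_work, execute, report, idle_seconds=5.0,
              max_polls=None, sleep=time.sleep):
    """Poll for jobs, execute them, and report results.

    fetch_work() returns a job dict with an "id" key, or None when idle.
    execute(job) returns True/False for pass/fail and may raise.
    report(result) sends the outcome back (in the real system, via the API).
    max_polls bounds the loop for testing; None means run forever.
    Returns the number of jobs completed.
    """
    completed = 0
    polls = 0
    while max_polls is None or polls < max_polls:
        polls += 1
        job = fetch_work()
        if job is None:
            # Nothing queued: back off before asking again.
            sleep(idle_seconds)
            continue
        try:
            result = {"job_id": job["id"], "passed": bool(execute(job)), "error": None}
        except Exception as exc:
            # A failing job is reported rather than crashing the agent.
            result = {"job_id": job["id"], "passed": False, "error": str(exc)}
        report(result)
        completed += 1
    return completed
```

Because the agent only makes outbound requests, a design like this needs no listening port on the agent side.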

These were written in C# on .NET 4.6, using Selenium to execute tests written in a custom grammar and following the PageObject pattern. Initially, tests ran against PhantomJS 2, but I was testing Headless Chrome locally for rollout.

Errors were reported via the API, the agents exposed no incoming ports, and all service-to-service communication relied on HMAC headers and shared keys. Diagnostic data was shipped to Librato.
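For readers unfamiliar with HMAC-signed requests, here is a minimal sketch of the general technique in Python. It is not the original C# implementation: the signed fields, the timestamp check, the replay window, and the key value are assumptions chosen for illustration.

```python
import hashlib
import hmac
import time

SHARED_KEY = b"example-shared-key"  # hypothetical; a real key would come from secure config
MAX_SKEW_SECONDS = 300              # assumed replay window, not from the original system


def sign_request(key, method, path, body, timestamp):
    """Return a hex HMAC-SHA256 signature over method, path, timestamp, and body."""
    message = b"\n".join([
        method.upper().encode("utf-8"),
        path.encode("utf-8"),
        str(timestamp).encode("ascii"),
        body,
    ])
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify_request(key, method, path, body, timestamp, signature, now=None):
    """Recompute the signature on the receiving side and compare in constant time."""
    now = int(time.time()) if now is None else now
    if abs(now - timestamp) > MAX_SKEW_SECONDS:
        return False  # too old or too far in the future: treat as a replay
    expected = sign_request(key, method, path, body, timestamp)
    return hmac.compare_digest(expected, signature)
```

The sender would place the timestamp and signature in request headers, and the receiver would reject any request whose recomputed signature does not match. Both sides need the same shared key, which is why no credentials have to travel with the request itself.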

Marketing Site

The marketing site was statically generated with the metalsmith Node.js package, covered in this post: Creating a Static-Generated Marketing Site

Sample Scripts

There were sample API clients written in PowerShell and Node.js, which ran three times a day to execute automated test runs against a Node.js sample site.

Example posts walked through deployment of a small Node.js application to Azure App Service and Heroku, using build services such as TeamCity, CircleCI, and Travis CI.

Continuous Delivery Pipeline

The delivery pipeline for the API and agents was managed by TeamCity. It included deployment to a local set of VMs for testing and automated delivery to Service Fabric and Azure App Service, including automatically applying changes to the database. A full production push took less than 15 minutes from pushing to git to reaching production and rolling out updated agents.
