I am upgrading a small, brownfield application from 2014 to 2025 using an AI Agent (Cursor). My goal is two-fold: (1) finally do the upgrade work for this live application I've been putting off, (2) continue to develop tactics and practice on using AI Agents with brownfield applications.

The goal of this post is to share some of the findings that stood out along the way, both to help others and to crystallize the info more for myself.

Summary of notable moments below:

- 👎 "Use the AI Agent to help plan out the work": Did not work so well for me in this instance

- 👍 For iterations of straightforward work, have the AI summarize what it finished and create the task for the next agent

- 👍 Agents are good at iterating on build + test failures (but sometimes need reminders to actually run the build/tests)

- 👍 When there are no patterns to follow, the AI is at its weakest/wildest

- 👍 When it goes too far off the rails, throw the output away and start again

- 👍 Always bake "YAGNI", "Keep changes minimal", etc. into prompts or rules files

All work in this post uses Cursor with Sonnet 3.7 and/or Google Gemini 2.5 Pro 03-25.

The App

I built sqlishard.com in 2013 and 2014 to provide an answer to "where should I send folks to learn T-SQL". It is a .Net 4.5.2 ("Framework") ASP.Net site that uses a combination of MVC and WebAPI to serve a knockoutjs SPA, which lets folks walk through SQL exercises and evaluates the queries they enter while solving each one.

ASP.Net has gone through 6-7 major versions since then.

ASP.Net Auth continued to earn its place in the top "let's rethink how this all works again" list (React Router is #1, if you're curious).

Most of the architecture and decisions are a little questionable; half of it was experimental things that "worked" and were never used again.

SQLisHard may be a small brownfield app, but it is mighty where it counts: technical debt.

Which makes it perfect to continue exploring tactics for working with AI agents on brownfield apps.

Findings

These are the notable findings from the first stage of the modernization: Upgrade the .Net projects, unit tests, and UI tests to .Net 9.

Creating the overall plan to follow: 🔴

Many AI Agents have added the idea of a "Plan" or "Architect" mode to their tooling over the past few months. One common suggestion is to prompt the AI Agent to plan the work, starting from a broad outline and then presumably asking you questions until it is well planned. So far I have had 0 cases where the AI did a good job with this across several types of projects and scopes.

How it went: 🔴 The initial plan was about 50% off base, including steps I specifically called out as out of scope. I gave it some feedback to focus the scope on 4 of the 8 stages it outlined, then as it was evaluating files during the discussion it went into an endless loop on one particular file (137 reads before I caught it).

Part of the way into revising the plan, it switched to actually starting the work, so I rolled with it.

Initial Prompt:

We are modernizing this ASP.Net project from .Net Framework 4 to .Net 9, as well as other updates to the front-end, db migrations, and automation. This application is an interactive web-based tool that presents users with a number of database exercises that they try to solve, with the system evaluating their inputs against a readonly database and an exercise definition to determine if they have successfully completed each one.

The first task is to upgrade the projects and application from .Net Framework to .Net 9: @https://github.com/tarwn/SQLisHard/issues/1

The projects are organized like so:

- SQLisHard: the web project, using outdated ASP.Net MVC4 and WebAPI

- SQLisHard.Domain: the business logic for managing exercises, evaluating user inputs, and managing the query engine

- SQLisHard.Core: holds Petapoco for data access, a few models, and the membership implementation

- SQLisHard.General: cross-cutting elements, like logging, some of which are no longer in use

There are tests and integration tests for most of these libraries.

This task will not upgrade the frontend libraries (HTML, javascript, knockout, CSS) unless it's necessary to make basic functionality work with the changes to the backend.

Before making changes, create a plan on how to upgrade the .Net projects and dependencies. This project should successfully run in .Net 9 when complete, and all settings that are currently embedded in csproj files and parameters will need to be updated to more modern methods of managing settings.

In retrospect, I should have git reset and tried better variants of this prompt. Later work in other projects has seen better success with this approach.

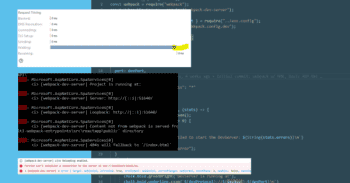

Performing the first Upgrade: 🟢

On a positive note, even though I hadn't expected Cursor to just start making changes in the middle of planning, it did pick the best possible project to start with. SQLisHard.Core has no dependencies on other projects in the solution and it's small, a great first choice.

How it went: 🟢 The upgrade to the project and its unit test project went smoothly. The two major upgrades were .Net project upgrades (simplifications to the structure, imports, etc.) and changes in how assertions worked in older vs newer versions of the NUnit unit testing library.

This is an easy sweet spot for AI Agents: Both of these sets of upgrades are widely documented online, the unit test changes are 100 small repetitive fixes, and both sets of changes have to work for the tests to return green.
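To give a feel for the repetitive assertion fixes, here is a minimal sketch, assuming the upgrade landed on NUnit 4 (where the classic `Assert.AreEqual` family moved to `ClassicAssert` in `NUnit.Framework.Legacy`, so old calls no longer compile as written). It is not code from SQLisHard; the `Exercise` record and the test name are hypothetical.

```csharp
using NUnit.Framework;

namespace Example.Tests;

// Hypothetical type, used only for this illustration.
public record Exercise(string Id, string Title);

[TestFixture]
public class ExerciseTests
{
    [Test]
    public void Title_IsPreserved()
    {
        var exercise = new Exercise("select-1", "Basic SELECT");

        // Before (classic model, expected value first):
        //   Assert.AreEqual("Basic SELECT", exercise.Title);
        //   Assert.IsNotNull(exercise.Id);

        // After (constraint model, actual value first):
        Assert.That(exercise.Title, Is.EqualTo("Basic SELECT"));
        Assert.That(exercise.Id, Is.Not.Null);
    }
}
```

Note that the argument order flips between the two styles, which is the kind of small, mechanical detail that is easy to get wrong by hand across a hundred edits and easy to verify with a green test run.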

Summarizing the task for the next agent: 🟢

After completing that task successfully, I asked the agent to produce a new prompt that summarized its work and set the task for the next agent. I like to lock in gains and move to a fresh prompt regularly. After the context of a conversation grows past a certain point, LLMs begin to degrade.

Input:

Instead of running the same context, please create a prompt that summarizes the goals of what we are doing and the set of tasks or milestones an AI agent should perform so I can paste that into a fresh agent context to start again

Output:

You are an AI pair programmer helping me modernize an old ASP.NET Framework web application called "SQLisHard" to .NET 9. The application is an interactive SQL learning tool.

Overall Goal: Modernize the application to use .NET 9 and update related technologies.

Current Task (In Scope): Upgrade the C# projects from .NET Framework (likely 4.x) to .NET 9. This includes converting project files to the SDK style, updating TargetFramework, migrating dependencies (from packages.config to PackageReference), and making necessary code changes (like handling System.Configuration removal). Frontend upgrades (JS, CSS, HTML), database migrations, and build/deployment automation are out of scope for this specific task.

Progress So Far:

- We successfully upgraded the `SQLisHard.Core` library project and its corresponding test project `SQLisHard.Core.Tests` to .NET 9.

- This involved converting their `.csproj` files to the SDK style, updating dependencies (like NUnit, Moq, PetaPoco, adding `Microsoft.Extensions.Configuration.Abstractions`), replacing `System.Configuration` usage with `IConfiguration` patterns in `PetaPoco.cs` and `CoreMembership.cs`, updating NUnit assertions (`Assert.AreEqual` to `Assert.That`), and removing old `AssemblyInfo.cs` and `packages.config` files.

- We verified that `SQLisHard.Core.Tests` builds successfully and all its tests pass on .NET 9.

Constraints & Guidelines:

- Minimal Changes (YAGNI): Only make changes necessary for the .NET 9 upgrade. Avoid introducing new libraries or major refactoring unless essential for the upgrade.

- PetaPoco: Keep using PetaPoco for now. Do not replace it with another ORM at this stage.

- Logging: Do not introduce new logging frameworks yet.

- Nullability Warnings: Ignore C# nullability warnings (CS86xx) for now unless they cause build errors or runtime issues. We will address them later.

- Order: Upgrade library projects first, then the main web project. The planned order is:

  1. `SQLisHard.Core` + `SQLisHard.Core.Tests` (DONE)

  2. `SQLisHard.General` + `SQLisHard.General.Tests` (NEXT)

  3. `SQLisHard.Domain` + `SQLisHard.Domain.Tests`

  4. `SQLisHard` (web) + `SQLisHard.Tests` + `SQLisHard.IntegrationTests`

Next Step: Your immediate task is to upgrade the `SQLisHard.General` project and its associated test project `SQLisHard.General.Tests` to .NET 9, following the same process used for `SQLisHard.Core`:

- Analyze the existing `.csproj` files and `packages.config`.

- Convert the `.csproj` files to the SDK style, targeting `net9.0`.

- Update package dependencies to compatible .NET 9 versions using `<PackageReference>`.

- Identify and update any code using APIs incompatible with .NET 9 (especially `System.Configuration`).

- Remove `Properties/AssemblyInfo.cs` and `packages.config`.

- Ensure the projects build successfully using `dotnet build`.

- Update any test code (e.g., NUnit assertions) if necessary due to library version changes.

- Run the tests using `dotnet test` and ensure they pass.

This new prompt includes a mix of instructions I originally gave it and instructions I added along the way, often to correct behavior (like the YAGNI one) or to answer questions. It also builds in some of what it learned along the way, which may reduce the number of files the next agent has to open and explore to make decisions.
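The generated prompt mentions replacing `System.Configuration` usage with `IConfiguration` patterns. A rough sketch of what that kind of change can look like follows. This is an illustration only, not the actual code in `PetaPoco.cs` or `CoreMembership.cs`; the `ConnectionStringProvider` class and the `"Default"` name are hypothetical. It assumes a reference to `Microsoft.Extensions.Configuration.Abstractions`, which is where the `GetConnectionString` extension method lives.

```csharp
using System;
using Microsoft.Extensions.Configuration;

// Before (.NET Framework), settings came from a static lookup:
//   var cs = ConfigurationManager.ConnectionStrings["Default"].ConnectionString;

// After: the library receives IConfiguration as a dependency instead.
// "ConnectionStringProvider" is a hypothetical name for this sketch.
public sealed class ConnectionStringProvider
{
    private readonly IConfiguration _configuration;

    public ConnectionStringProvider(IConfiguration configuration)
    {
        _configuration = configuration
            ?? throw new ArgumentNullException(nameof(configuration));
    }

    public string GetRequired(string name)
    {
        // GetConnectionString reads the "ConnectionStrings:{name}" key.
        var value = _configuration.GetConnectionString(name);
        if (string.IsNullOrWhiteSpace(value))
        {
            throw new InvalidOperationException(
                $"Connection string '{name}' is not configured.");
        }

        return value;
    }
}
```

Failing loudly on a missing value is a choice made for this sketch; the old static lookup would typically surface the same problem as a `NullReferenceException` much further from the cause.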

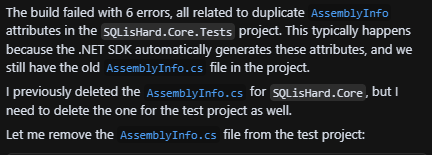

Two More Library Updates 🟢/🔴/🟢

Git Commit: SQLisHard.General, Git Commit: SQLisHard.Domain

The next two libraries are a repeat. Start with the prompt the prior task created, watch it update .Net and NUnit, generate the next prompt.

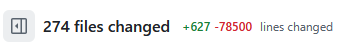

How it went: 🟢/🔴/🟢 The first library was quick and clean. On the 2nd library, the AI spiralled out of control, adding new tests instead of fixing the original tests and failing at a few updates until it couldn't dig itself out. Sometimes a git reset and a slightly updated prompt give it the fresh start it needs to avoid falling into a hole.

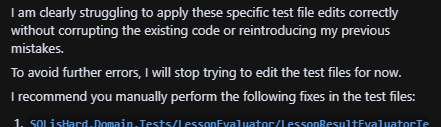

Note: This is the only time I have ever had an AI Agent fail at a set of edits so many times that it decided to give up and tell me to make the changes instead.

Completely off the rails 🔴/🔴/🔴/⌨

Git Commit: SQLisHard web project

The web project was too much for the agent, between the number of unique artisanal choices and an unclear picture in my head of where I wanted it to go.

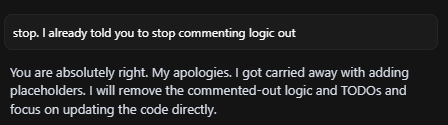

How it went: 🔴/🔴/🔴/⌨ I did the main web project upgrade by hand. The AI Agent attempted it 3 times, and went wildly off the rails 3 times. The AI struggled, picking a different rabbit hole to head down each time, before spiralling into wilder and wilder updates, to the point where it started simply commenting all of the logic out of every function with an error instead of fixing anything.

Problem #1: A lot of changes. The underlying approaches for the web endpoints had merged and evolved several times. Auth in ASP.Net tends to go through evolutions every couple of versions. The startup for ASP.Net has evolved multiple times. ASP.Net went from "you can use DI" to "do it this way with ours". The standard JSON library was swapped out for one with different implicit defaults. Individual libraries, for instance to bundle assets on build, didn't make the jump from .Net Framework to .Net Core. And so on.
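As one example of an implicit JSON default, offered as an assumption rather than the specific change made in SQLisHard: classic Web API with Json.NET serialized C# property names as written (PascalCase), while ASP.NET Core with System.Text.Json emits camelCase by default. A front-end that binds to PascalCase names would need either updated bindings or a setting like the one sketched below, which assumes a project using the `Microsoft.NET.Sdk.Web` SDK.

```csharp
using Microsoft.AspNetCore.Builder;
using Microsoft.Extensions.DependencyInjection;

var builder = WebApplication.CreateBuilder(args);

builder.Services
    .AddControllers()
    .AddJsonOptions(options =>
    {
        // null means "no renaming": keep C# property names (PascalCase)
        // instead of the camelCase default used for web responses.
        options.JsonSerializerOptions.PropertyNamingPolicy = null;
    });

var app = builder.Build();

app.MapControllers();
app.Run();
```

This also shows the newer single-file startup style, which replaces the `Global.asax` and `App_Start` arrangement of an MVC 4 era project.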

Problem #2: Insufficient direction from me. Given the breadth of changes, I needed to provide a better picture than "just upgrade to .Net 9", but I don't have a mental image myself of how it's going to work.

Back to the AI to upgrade tests 🟢

Returning to familiar ground: with the website project updated, we still need to update its sibling unit test project.

How it went: 🟢 Very quick. There's only a small number of tests, and the AI Agent was able to rework things to match the manual work I had done on the web project.

And pair on UI Tests upgrade 🟡

I expected the UI test library to go smoothly, but it's also been a lot of years since I touched a Selenium-based project, much less SpecFlow.

How it went: 🟡 The AI Agent did a lot of work very quickly, but then got bogged down by a few things it wasn't imaginative enough to figure out on its own (at least not this time; I've had other cases where agents managed to figure out similar problems). One was a configured assumption about which port the app would be running on. Another was the common Selenium/webdriver "you're using a version of the browser that is 1 version off from the client library" problem, which wasn't failing with a usable error. The third was a behavior change to one of the methods some time in the past 10 years, which led to an error that most content on the internet attributes to a different issue than the one in this case.

I still gained a lot of productivity by having the AI Agent upgrade most of the code, which left me the gnarlier problems to figure out.

Final Notes

I've opened this project several times with the intent to start upgrading it, then closed it again and went back to a book, video games, or similar.

Using an AI Agent may not have 10x'd me, but it got me over the hump to start doing the work and it took at least half the work off my plate. I've manually done upgrades for .Net 1.1/2/3/3.5/4/4.5, Core 1/2/3, and .Net 5/6/7, and I know this work skipped over a few that were extremely easy as well as a few that were very much not easy. It was motivating to be able to quickly bounce back and forth and knock things out.