Agents on top of LLMs have completely undone my "should I automate this or not" gut feel.

Like most developers, I have a finely tuned feel for when a task is almost long enough to script, but not quite, and I decide to grind it out manually instead of writing something new.

While a lot of folks are still arguing over how much we can trust AI agents with coding, I think there's pretty broad agreement that short, greenfield tasks are extremely doable.

This not only changes the math on tasks I would have shut my brain off and done by hand, it is also forcing me to notice the tasks I never even bothered starting that are suddenly super cheap.

I'm going to present two real-world cases from investigating bugs in a video automation process. Maybe they will give you some ideas.

One-off tools to find and fix a video timing bug

Recently we uncovered a subtle bug in one of my most production-critical services. This service is core to our domain, pushing 10s to 100s of videos through a variety of video & audio processing stages, and it was quietly adding a few milliseconds to each clip.

This is problematic because the error accumulates across every clip in the sequence. When we say, "bring the new music track in at full volume with the start of clip #71", what we don't want is for the new track to come in loud half a second before clip #71 and stomp on someone who is speaking.
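To make the accumulation concrete, here is a tiny sketch of the arithmetic. The 7 ms figure is a made-up illustrative value, not a measurement from this job, and `accumulatedDriftMs` is a hypothetical helper.

```javascript
// Hypothetical per-clip error, for illustration only.
const EXTRA_MS_PER_CLIP = 7;

// A clip's start time is the sum of the durations of every clip before it,
// so each earlier clip adds its own error to where this clip really begins.
function accumulatedDriftMs(clipNumber, extraMsPerClip) {
  const clipsBefore = clipNumber - 1;
  return clipsBefore * extraMsPerClip;
}

console.log(accumulatedDriftMs(71, EXTRA_MS_PER_CLIP)); // 490
```

A cue computed from the expected durations would land roughly half a second ahead of where clip #71 actually starts.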

Investigation

Luckily, every one of the over 300 ffmpeg calls for this job is logged, along with diagnostics and decisions captured at every stage of the job. And I have a method to pull that entire job out of the cloud and run it on my local machine. Because it does the exact same thing on my local system, that removes factors like OS, ffmpeg version and build details, codecs, etc. from the equation.

And I have several hundred intermediary files from the process that I can recreate on demand, to dig in past the available logs.

The first step is to take all of the measurements from the logs and throw them into Excel.

I did this manually in about 5 minutes, because my old gut feel for the timing said that putting together a script to parse these logs would take longer.

It hadn't occurred to me not to trust my gut yet.

I manually run ffprobe on 2-3 (of ~100) original clips to extract the stream durations of each file in the progression from the original to the final processed file. Pasted into my Excel sheet, this makes it immediately apparent that the trim stage is introducing the biggest unexpected drift in durations.

And now I'm at a crossroads.

The root problem is happening in a single ffmpeg command. I measure the outcome (is the duration what I expected) with an ffprobe afterward to get the durations from the audio and video streams (you would be shocked how often the underlying audio and video streams do not match in just about every video you watch).
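As a rough sketch of that measurement step: the ffprobe flags below (`-v error`, `-show_entries`, `-of json`) are standard, but the helper names are hypothetical and are not the `getVideoDuration`/`getAudioDuration` functions from my service. It also assumes the container reports a per-stream duration, which mp4 normally does.

```javascript
const { execFileSync } = require("node:child_process");

// Ask ffprobe for only the type and duration of each stream, as JSON.
function ffprobeArgs(filePath) {
  return ["-v", "error", "-show_entries", "stream=codec_type,duration", "-of", "json", filePath];
}

// Pull the first video and first audio stream durations (in seconds) out of ffprobe's JSON.
function parseStreamDurations(ffprobeJson) {
  const { streams = [] } = JSON.parse(ffprobeJson);
  const durationOf = (type) => {
    const stream = streams.find((s) => s.codec_type === type);
    return stream ? Number(stream.duration) : NaN;
  };
  return { video: durationOf("video"), audio: durationOf("audio") };
}

// Runs ffprobe on a real file; requires ffprobe on the PATH.
function probeDurations(filePath) {
  const stdout = execFileSync("ffprobe", ffprobeArgs(filePath), { encoding: "utf8" });
  return parseStreamDurations(stdout);
}

// How far apart the two streams are, in whole milliseconds.
function mismatchMs({ video, audio }) {
  return Math.round((video - audio) * 1000);
}

// Example with canned ffprobe output, so it runs without a video file.
const sample = '{"streams":[{"codec_type":"video","duration":"10.010000"},{"codec_type":"audio","duration":"9.984000"}]}';
console.log(mismatchMs(parseStreamDurations(sample))); // 26
```

Keeping the parsing separate from the ffprobe call makes it easy to check the logic against saved output before pointing it at hundreds of files.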

I could manually paste the two commands into a console and run them for 4-5 files, copy/pasting the resulting lengths back into Excel, where I have some equations and conditional formatting showing me where the problems are. A couple of years ago this is exactly what my process would have been, followed by running a variety of very large jobs and sampling the results for a few files within each job.

Mass examination of the fix

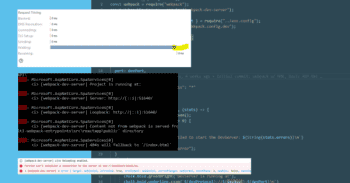

But I have an AI agent now, and a temporary tools folder that I can use as a harness to run this process a lot more scientifically and in less time. So I give it this prompt:

Create a new script named `src/testTrimMethods.ts` that is similar to @/src/convert.ts that is designed to test trimming a set of files using the `trimVideo` vs `newTrimVideo` methods in @/src/render-server/ffmpeg.service.ts . Defined in the file should be a set of test cases (named `x` in `convert.ts`) and a number of iterations to run each test case. The test case should include a relative path to an input mp4, a start time, and a duration. During the test run, for each test case over the defined number of iterations, define two output paths for each trim method by prepending `out/trim/` to the input path name, and incorporating the trim function number and iteration as part of the filename before the file extension. Run the two trim functions to produce the output files.

Run `getAudioDuration` and `getVideoDuration` for each output file and capture the results, using a timer to capture the elapsed execution time of the function. At the end of the run, output a CSV with the follow columns/values: input path, iteration, target start, target duration, trimVideo duration, trimVideo elapsed, newTrimVideo duration, newTrimVideo elapsed.

A few moments later I have a test harness that lets me try multiple implementations of trim methods against 10 different files automatically, with CSV output I can paste directly into my Excel testing sheet.
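The core of a harness like that is small. This is not the script the agent generated, just a minimal sketch of the same shape in plain JavaScript. The file naming scheme, the `trim(input, output, start, duration)` signature, and the `probe(output)` callback are all assumptions, standing in for `trimVideo`/`newTrimVideo` and the duration helpers.

```javascript
const path = require("node:path");

const CSV_HEADER = [
  "input path", "iteration", "target start", "target duration",
  "trimVideo duration", "trimVideo elapsed", "newTrimVideo duration", "newTrimVideo elapsed",
];

// out/trim/<original dir>/<name>-trim<N>-iter<I><ext>
function buildOutputPath(inputPath, trimFunctionNumber, iteration) {
  const { dir, name, ext } = path.posix.parse(inputPath);
  return path.posix.join("out/trim", dir, `${name}-trim${trimFunctionNumber}-iter${iteration}${ext}`);
}

// Await fn and report how long it took in milliseconds.
async function timed(fn) {
  const start = performance.now();
  const result = await fn();
  return { result, elapsedMs: performance.now() - start };
}

// Run every trim function once for one test case and return one CSV row (as an array).
async function runCase(testCase, iteration, trimFns, probe) {
  const row = [testCase.input, iteration, testCase.start, testCase.duration];
  for (const [i, trim] of trimFns.entries()) {
    const output = buildOutputPath(testCase.input, i + 1, iteration);
    const { elapsedMs } = await timed(() => trim(testCase.input, output, testCase.start, testCase.duration));
    row.push(await probe(output), Math.round(elapsedMs));
  }
  return row;
}

// Naive CSV: fine for pasting into a spreadsheet as long as paths contain no commas.
function toCsv(rows) {
  return [CSV_HEADER, ...rows].map((row) => row.join(",")).join("\n");
}

console.log(buildOutputPath("clips/a.mp4", 2, 3)); // out/trim/clips/a-trim2-iter3.mp4
```

Passing the trim functions in as an array is what makes it cheap to add a third or fourth candidate implementation later.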

Next, instead of running a few jobs and sampling, I tell the AI agent to add the contents of a local "Trouble" folder to the test cases. This is a growing collection of video clips that have historically caused problems, so I can make extra sure that the new method doesn't reintroduce any of those past problems.

Finally, one last run through to make sure all the individual changes result in the fix I wanted when I jam 110 files through the render.

55 minutes, time for a new cup of coffee.

Prompting the LLM to find the next problem

The final result looks a lot better, but analyzing it in Premiere shows there's still a tiny bit of drift unaccounted for.

Remember those 5 minutes of manual Excel work in the beginning? That was silly, and now I know it. This time I'll have the agent do it by scanning back through the intermediary files and pulling out the data I need:

write me a basic javascript script file `tools/examineVideoAudioDrift.js` that accepts a starting file prefix, such as "2001" and a starting file index, such as "7659", and a number of files to examine, such as "20", and proceeds to loop to create 4 file paths (`uploads/${prefix}-${index+i}-truncated.mp4`, `uploads/${prefix}-${index+i}-resized.mp4`, `uploads/${prefix}-${index+i}-final.mp4`, `uploads/${prefix}-${index+i}-final-transitioned.mp4`), uses `getVideoDuration` and `getAudioDuration` from @/libraries\ffmpeg\ffmpeg.service.js to examine each file for the duration of the video and audio streams, and then outputs a CSV of:

- i

- `${prefix}-${index+i}`

- video duration of truncated

- audio duration of truncated

- video duration of resized

- audio duration of resized

- video duration of final

- audio duration of final

- video duration of final transitioned

- audio duration of final transitioned

Even with the misspelling, the AI agent one-shots this easy prompt. In one additional step I paste the CSV into Excel and locate the few extra frames of drift, which end up requiring a simple 4-character change in a later processing step.
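For a sense of how little code that prompt describes, here is a minimal sketch of the loop body. It is not the agent's output. `probe` is a hypothetical stand-in that returns `{ video, audio }` durations for a path.

```javascript
const STAGES = ["truncated", "resized", "final", "final-transitioned"];

// Command-line arguments arrive as strings, so convert the index before adding:
// "7659" + 1 would be "76591", not 7660.
function fileKey(prefix, startIndex, i) {
  return `${prefix}-${Number(startIndex) + i}`;
}

// The four intermediary files for one clip, in processing order.
function buildStagePaths(prefix, startIndex, i) {
  return STAGES.map((stage) => `uploads/${fileKey(prefix, startIndex, i)}-${stage}.mp4`);
}

// One CSV line: i, file key, then video and audio duration for each stage.
function buildRow(prefix, startIndex, i, probe) {
  const durations = buildStagePaths(prefix, startIndex, i).flatMap((filePath) => {
    const { video, audio } = probe(filePath);
    return [video, audio];
  });
  return [i, fileKey(prefix, startIndex, i), ...durations].join(",");
}

console.log(buildStagePaths("2001", "7659", 1)[0]); // uploads/2001-7660-truncated.mp4
```

With each stage side by side in one row, the stage where the audio and video durations start to diverge stands out as soon as the CSV lands in a spreadsheet.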

Two Sentences to carefully fix a full harddrive

Enter the second problem.

Rerunning video production jobs on my local machine has (once again) filled my hard drive. Now, for reasons specific to this system and what I'm working on, I don't want to delete original or final versions of files in this working folder. But if you look at the file numbering above, the index (currently "8317") is a counter for how many video files I've processed in this folder since some date back in 2022 (thus, running out of HDD space, again).

In a pre-AI world, my options would be:

1. Write a quick command-line call, YOLO, live with the fallout of inevitably deleting files I didn't mean to and don't notice for 6 months

2. Turn my brain off, spend an hour selectively deleting files (arrow, delete, enter, arrow, arrow, enter, delete, …)

3. Spend 30 minutes to 2 hours writing a script to delete a subset of the files

I probably would have picked 2. But an AI intern that can write scripts cheaply and quickly means that in one minute and for $0.0925, I get the script for option 3 in the time of option 1. The prompt:

write me a basic javascript file called `tools/deleteOldFiles.js` that will examine the list of files in the `uploads` subfolder that are older than 6 months, end in "-truncated.mp4" or "-resized.mp4" or "-normalized.mp4" or include the term "concatChunk" in the name, and tell the number of files and human readable disk size and prompt the user to ask if I want to delete them. If the user then presses "y", delete the list of files from the disk and exit. If the user presses any other key, exit without deleting the files.
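The selection logic that prompt asks for might look roughly like this. It is a sketch, not the generated script, and it makes two assumptions: "older than 6 months" is approximated as 182 days, and age is judged by the file's modification time. It only lists candidates and never deletes anything.

```javascript
const fs = require("node:fs");
const path = require("node:path");

// Assumption: "6 months" approximated as 182 days.
const SIX_MONTHS_MS = 182 * 24 * 60 * 60 * 1000;
const SUFFIXES = ["-truncated.mp4", "-resized.mp4", "-normalized.mp4"];

// True only for old intermediary files; originals and finals never match the name rules.
function isDeletable(name, mtimeMs, nowMs) {
  const oldEnough = nowMs - mtimeMs > SIX_MONTHS_MS;
  const nameMatches = SUFFIXES.some((suffix) => name.endsWith(suffix)) || name.includes("concatChunk");
  return oldEnough && nameMatches;
}

// 1536 -> "1.5 KB"
function humanSize(bytes) {
  const units = ["B", "KB", "MB", "GB", "TB"];
  let value = bytes;
  let unit = 0;
  while (value >= 1024 && unit < units.length - 1) {
    value /= 1024;
    unit += 1;
  }
  return `${value.toFixed(1)} ${units[unit]}`;
}

// Dry run: collect matching files in a folder with their sizes, deleting nothing.
function findCandidates(dir, nowMs = Date.now()) {
  return fs.readdirSync(dir)
    .map((name) => ({ name, stat: fs.statSync(path.join(dir, name)) }))
    .filter(({ name, stat }) => stat.isFile() && isDeletable(name, stat.mtimeMs, nowMs))
    .map(({ name, stat }) => ({ name, size: stat.size }));
}

console.log(humanSize(1536)); // 1.5 KB
```

Reporting the count and total size before asking for a "y" is the whole safety mechanism: it is the cheap guard that option 1 never had.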

This adds up. These are 3 scripts for one particular bug I was working on, and they lowered the time and cost in 3 small ways that led me to approach the solution (and prove out the solution) from a different and better direction.

This changes the math

Whether or not we're willing to trust LLMs with our production code, there's a lot of benefit to be found in using them to quickly write throwaway scripts day-to-day.

It's useful enough that we should be consciously re-evaluating the defaults we've learned over time. "This takes longer to script than to do by hand", "This is probably a 15 minute task", etc. need to be re-examined to make sure they're still true.

I guarantee there are things you're doing every day where 1-2 minutes and $0.0925 will buy you back 10 minutes or more. There are also cases where "Write me a script to brute force this thing" isn't worth your own time but is worth a few minutes and $0.55, and that may have you coming at problems completely differently than you would have in the past.