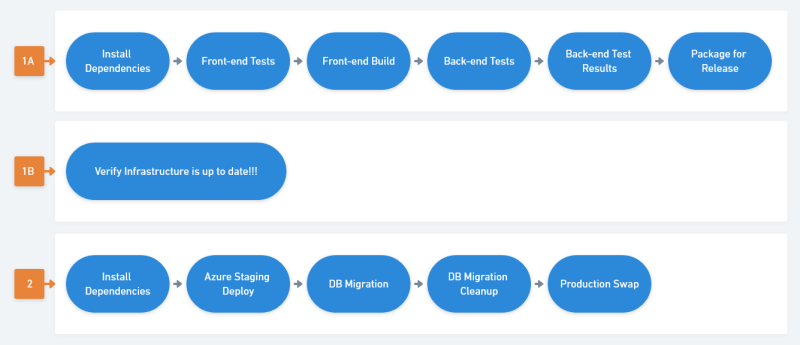

In Deploying an ASP.Net website to Azure via CircleCI, I outlined a two-stage pipeline to build and deploy an ASP.Net application to Azure. In this post, I'm adding a new stage that verifies the infrastructure matches expectations. It runs in parallel with the CI stage that tests the code and builds deployable artifacts.

This post relies on the Terraform configuration outlined in the prior post, Terraform for an Azure Web App/SQL Stack.

This is configured as a parallel job for 3 reasons:

- Time: Running separate build jobs allows them to run in parallel and gives feedback (or delivery to production) faster.

- Flexibility: The infrastructure job can run against a different filtered branch set than the CI job.

- Narrower Docker Images: The original CI step uses an out-of-the-box Microsoft Docker image, while the infrastructure check needs an image with Terraform and Azure CLI. Separate jobs reduce complexity: we can use two tightly defined images instead of one much more complex one.

Rework the Pipeline for new Terraform Job

Starting with the pipeline from Deploying an ASP.Net website to Azure via CircleCI, there are three main sets of changes:

- (All) Change out environment + infrastructure variables

- (Job 1B) Add the new build that performs the terraform plan check

- (Job 2) Apply the new details as a connection string in the deployed app settings

The final CircleCI config is located here (github): .circleci/config.yml

Configuration Updates

For the configuration, there are two sets of variables:

- variables that will no longer be hardcoded and will instead be sourced from the terraform state

- variables necessary as inputs for the terraform plan

This is also a good opportunity to standardize the naming, since I had mixed casing and styles in the earlier pipeline.

Here's what I end up with for CircleCI env vars:

- AZURE_SP_* - the returned values from creating the Azure Service Principal (example)

  - AZURE_SP_APPID - appId value

  - AZURE_SP_NAME - name (not displayName, starts with http://)

  - AZURE_SP_PASSWORD - generated password value

- AZURE_SUBSCRIPTION_ID - subscription id for the azure resources and Service Principal

- AZURE_TENANT - tenant id, visible in the Service Principal output

- DISCORD_STATUS_WEBHOOK - the value provided by Discord after creating a webhook

- SQL_ADMIN_LOGIN - username used in the terraform config for the SQL admin

- SQL_ADMIN_PASSWORD - password used in the terraform config for the SQL admin

- SQL_APP_NAME - a user with limited access that will be used by the web app to access the DB

- SQL_APP_PASSWORD - the password for that user

- SQL_THREAT_EMAIL - the email address used in the terraform config for threat email notifications

Some of these are direct updates of existing values, some will be referenced by the next set of changes. The biggest change is removing several values from the project settings/environment variables and sourcing them from the terraform state instead.
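With this many project settings in play, a missing one tends to surface as a confusing failure several steps into a job. Here is a minimal sketch of a guard that could run as the first step, assuming a POSIX-compatible shell. The `require_env` helper is hypothetical and not part of the original pipeline.

```shell
# Hypothetical helper: report any required variable that is unset or empty.
# Prints one line per missing name and returns non-zero if any are missing.
require_env() {
  missing=0
  for name in "$@"; do
    # Indirect lookup that works in POSIX sh as well as bash.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "Missing required variable: $name"
      missing=1
    fi
  done
  return "$missing"
}

# Usage inside a run step (not executed here):
#   require_env AZURE_SP_APPID AZURE_SP_NAME AZURE_SP_PASSWORD \
#     AZURE_SUBSCRIPTION_ID AZURE_TENANT SQL_ADMIN_LOGIN SQL_ADMIN_PASSWORD \
#     SQL_APP_NAME SQL_APP_PASSWORD SQL_THREAT_EMAIL || exit 1
```

The check only confirms that a value is present. It does not validate that the value is correct.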

Terraform Verification Job (Job 1B)

The goal of the new job is to verify the terraform configuration still matches the deployed infrastructure. If the real infrastructure doesn't match, we fail the build until someone updates the infrastructure and retries.

The terraform job will need to:

- Map the generic environment variables to the ones it expects to have for the Azure Auth

- Run terraform plan with the matching secrets the infrastructure was deployed with

- Detect if there are changes in that plan

- Capture outputs for the next step: Resource Group Name, App Name, Staging URL, SQL Server and Database names

It also needs an image that includes both Terraform and Azure CLI. In this case I'm using zenika/terraform-azure-cli (github | dockerhub) (again with the ⚠⚠⚠ warning at the top).

So this is the new job in the circleci config:

    terraform-plan:
      docker:
        - image: zenika/terraform-azure-cli:latest
      steps:
        - checkout
        - run:
            name: prepare terraform env vars
            command: |
              echo 'export ARM_SUBSCRIPTION_ID="${AZURE_SUBSCRIPTION_ID}"' >> $BASH_ENV
              echo 'export ARM_TENANT_ID="${AZURE_TENANT}"' >> $BASH_ENV
              echo 'export ARM_CLIENT_ID="${AZURE_SP_APPID}"' >> $BASH_ENV
              echo 'export ARM_CLIENT_SECRET="${AZURE_SP_PASSWORD}"' >> $BASH_ENV
              source $BASH_ENV
        - run:
            name: terraform plan
            command: |
              cd infrastructure
              terraform init
              terraform plan -out=tfplan -var "sql-login=$SQL_ADMIN_LOGIN" -var "sql-password=$SQL_ADMIN_PASSWORD" -var "sql-threat-email=$SQL_THREAT_EMAIL"
              echo "Checking status to continue..."
              count=$(terraform show tfplan | grep -c "Your infrastructure matches the configuration") || true
              if [ $count -gt 0 ]; then
                echo "No changes, infrastructure is up to date";
              else
                echo "******************************************************************"
                echo "* Terraform plan requires changes to be applied, cannot continue *";
                echo "* Apply terraform changes before retrying this job *";
                echo "******************************************************************"
                exit 1
              fi
              echo $(terraform output resource_group_name) > ../tf-resource_group_name
              echo $(terraform output app_name) > ../tf-app_name
              echo $(terraform output app_staging_url) > ../tf-app_staging_url
              echo $(terraform output db_server_name) > ../tf-db_server_name
              echo $(terraform output db_database_name) > ../tf-db_database_name
        - persist_to_workspace: # store the built files into the workspace for other jobs.
            root: ./
            paths:
              - "tf-*"

- The first step maps the project settings from CircleCI to the specific environment variables that Terraform/Azure CLI expects to have available.

- Next the plan is generated to an output file

- The script verifies the presence of the string "Your infrastructure matches the configuration"

- It writes five terraform outputs (resource group name, app name, staging URL, SQL server name, database name) to respective files, which are then persisted at the end of the job for later access
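Matching on the text of the plan ties the gate to the exact wording Terraform prints. As an alternative to the string match (not what the pipeline above does), `terraform plan` accepts a `-detailed-exitcode` flag that returns 0 when there are no changes, 1 on error, and 2 when changes are pending. A minimal sketch of a gate built on that, where the `plan_gate` helper name is hypothetical:

```shell
# Hypothetical helper: map a `terraform plan -detailed-exitcode` status to a gate decision.
#   0 = plan succeeded with no changes
#   1 = plan failed
#   2 = plan succeeded and changes are pending
plan_gate() {
  case "$1" in
    0) echo "No changes, infrastructure is up to date"; return 0 ;;
    2) echo "Terraform plan requires changes to be applied, cannot continue"; return 1 ;;
    *) echo "terraform plan failed (exit code $1)"; return 1 ;;
  esac
}

# Usage inside the run step (not executed here). The `|| plan_status=$?` form
# captures the exit code without tripping a shell that runs with `-e`.
#   plan_status=0
#   terraform plan -detailed-exitcode -out=tfplan \
#     -var "sql-login=$SQL_ADMIN_LOGIN" \
#     -var "sql-password=$SQL_ADMIN_PASSWORD" \
#     -var "sql-threat-email=$SQL_THREAT_EMAIL" || plan_status=$?
#   plan_gate "$plan_status" || exit 1
```

This also distinguishes a plan that failed to run from a plan that found drift, which the string match reports the same way.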

Use Terraform Outputs in Deploy (Job 2)

The last step is to use those new variables in the deploy. The build already attaches the persisted workspace to access the artifacts from the first job (app, migration tool, healthcheck script), so we add a step to extract the new values from the new tf-* files and add them as ENV vars:

    - run:
        name: import terraform outputs
        command: |
          cd /tmp/workspace
          resource_group_name=$(cat tf-resource_group_name)
          app_name=$(cat tf-app_name)
          app_staging_url=$(cat tf-app_staging_url)
          db_server_name=$(cat tf-db_server_name)
          db_database_name=$(cat tf-db_database_name)
          echo "export AZURE_RG_NAME=$resource_group_name" >> $BASH_ENV
          echo "export AZURE_APP_NAME=$app_name" >> $BASH_ENV
          echo "export AZURE_APP_STAGING_URL=$app_staging_url" >> $BASH_ENV
          echo "export DB_SERVER_NAME=$db_server_name" >> $BASH_ENV
          echo "export DB_DATABASE_NAME=$db_database_name" >> $BASH_ENV
          source $BASH_ENV

All of the references in the later steps are updated to the new variable names from terraform and the updated naming of the circleci project settings.
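The import step repeats the same read-then-export pattern once per value. A minimal sketch of how that pattern could be folded into one helper, assuming a POSIX-compatible shell and values that contain no single quotes. The `export_from_file` name is hypothetical, and stripping surrounding double quotes is a defensive assumption in case a persisted file holds a quoted value.

```shell
# Hypothetical helper: read one persisted tf-* file and append an export line to an env file.
#   $1 = variable name to export, $2 = file holding the value, $3 = env file to append to
export_from_file() {
  var_name="$1"
  source_file="$2"
  env_file="$3"
  # Drop one leading and one trailing double quote, if present.
  value=$(sed -e 's/^"//' -e 's/"$//' "$source_file")
  # Single-quote the value so it is exported literally (assumes no single quotes inside).
  printf "export %s='%s'\n" "$var_name" "$value" >> "$env_file"
}

# Usage inside the deploy job (not executed here):
#   export_from_file AZURE_RG_NAME /tmp/workspace/tf-resource_group_name "$BASH_ENV"
#   export_from_file AZURE_APP_NAME /tmp/workspace/tf-app_name "$BASH_ENV"
#   source "$BASH_ENV"
```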

One last (temporary) addition is a shimmed configuration management section in the Staging Deploy step. This adds a ConnectionString using settings from terraform and the new APP user/password in the CircleCI Config:

    # TEMP - add/update configs - this should be handled by a config mgmt service or re-usable script tied to config mgmt
    app_conn_string="Server=tcp:${DB_SERVER_NAME}.database.windows.net,1433;Initial Catalog=${DB_DATABASE_NAME};Persist Security Info=False;User ID=${SQL_APP_NAME};Password=${SQL_APP_PASSWORD};MultipleActiveResultSets=False;Encrypt=True;TrustServerCertificate=False;Connection Timeout=30;"
    az webapp config connection-string set -t SQLAzure -g ${AZURE_RG_NAME} -n ${AZURE_APP_NAME} -s staging --settings Database="${app_conn_string}"

And there we go, a copy of the earlier pipeline that now has a gate on the terraform state, uses those values for the deploy, and has a shim that we can replace to start introducing configuration management during the deploy (assuming I go that direction with this one).
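Going back to the shim: the pipeline assembles the same connection string shape twice, once with the app user for the web app settings and once with the admin login for the migration tool. A minimal sketch of a shared builder, where the `build_conn_string` helper is hypothetical and the string format is copied from the shim:

```shell
# Hypothetical helper: assemble an Azure SQL connection string from its parts.
#   $1 = server name (without .database.windows.net), $2 = database, $3 = user, $4 = password
build_conn_string() {
  printf 'Server=tcp:%s.database.windows.net,1433;Initial Catalog=%s;Persist Security Info=False;User ID=%s;Password=%s;MultipleActiveResultSets=False;Encrypt=True;TrustServerCertificate=False;Connection Timeout=30;' \
    "$1" "$2" "$3" "$4"
}

# Usage inside the deploy job (not executed here):
#   app_conn_string=$(build_conn_string "$DB_SERVER_NAME" "$DB_DATABASE_NAME" "$SQL_APP_NAME" "$SQL_APP_PASSWORD")
#   conn_string=$(build_conn_string "$DB_SERVER_NAME" "$DB_DATABASE_NAME" "$SQL_ADMIN_LOGIN" "$SQL_ADMIN_PASSWORD")
```

Passing the parts as printf arguments rather than interpolating them into the format string means a password containing a percent sign is emitted as-is.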

Putting it all together

Below is the final CircleCI config for the 3 jobs (/.circleci/config.yml). The first job (1A: build-application) is untouched, the other two reflect the changes above and a couple other small changes.

    version: 2.1

    orbs:
      discord: antonioned/discord@0.1.0

    jobs:
      build-application:
        docker:
          - image: mcr.microsoft.com/dotnet/sdk:5.0-buster-slim
        environment:
          VERSION_NUMBER: 0.0.0.<< pipeline.number >>
          JEST_JUNIT_OUTPUT_DIR: "../../../reports/"
          JEST_JUNIT_OUTPUT_NAME: "frontend.xml"
          JEST_JUNIT_ANCESTOR_SEPARATOR: " > "
          JEST_JUNIT_SUITE_NAME: "{filename}"
          JEST_JUNIT_CLASSNAME: "{classname}"
          JEST_JUNIT_TITLE: "{classname} > {title}"
        steps:
          - checkout
          - run:
              name: Install Build/System Dependencies
              command: |
                apt-get update -yq \
                  && apt-get install curl gnupg -yq \
                  && curl -sL https://deb.nodesource.com/setup_14.x | bash \
                  && apt-get install nodejs -yq
                curl -sS https://dl.yarnpkg.com/debian/pubkey.gpg | apt-key add -
                echo "deb https://dl.yarnpkg.com/debian/ stable main" | tee /etc/apt/sources.list.d/yarn.list
                apt update && apt install yarn -yq
                apt install zip -yq
          - run:
              name: Front-end Test
              command: |
                cd frontend/react-parcel-ts
                yarn install
                yarn run ci:lint --output-file ~/reports/eslint.xml
                yarn run ci:test
                ls
          - run:
              name: Front-end Build
              command: |
                cd frontend/react-parcel-ts
                yarn run build
          - run:
              name: Back-end Test
              command: |
                cd backend
                cp ./ELA.App.Tests/appsettings.none.json ./ELA.App.Tests/appsettings.json
                dotnet test --filter "TestCategory!=Database-Tests" -l trx -l console -v m -r ~/reports/
          - run:
              name: Back-end Test Results
              when: always
              command: |
                dotnet tool install -g trx2junit
                export PATH="$PATH:/root/.dotnet/tools"
                trx2junit ~/reports/*.trx
          - store_test_results:
              path: ~/reports
          - store_artifacts:
              path: ~/reports
          - run:
              name: Build for Release
              command: |
                cd backend
                dotnet publish ./ELA.App/ELA.App.csproj -c Release /property:Version=$VERSION_NUMBER -o ../app-publish --runtime win-x64
                dotnet publish ./ELA.Tools.DatabaseMigration/ELA.Tools.DatabaseMigration.csproj -c Release /property:Version=$VERSION_NUMBER -o ../app-migrate --self-contained -r linux-musl-x64
                cd ../app-publish
                zip -r ../app-publish.zip *
                cd ../app-migrate
                chmod 755 ELA.Tools.DatabaseMigration
          - persist_to_workspace: # store the built files into the workspace for other jobs.
              root: ./
              paths:
                - app-publish.zip
                - app-migrate
                - tools/PollHealthcheck
          - discord/status:
              fail_only: true
              failure_message: "Application: **$CIRCLE_JOB** job has failed!"
              webhook: "${DISCORD_STATUS_WEBHOOK}"
              mentions: "@employees"

      terraform-plan:
        docker:
          - image: zenika/terraform-azure-cli:latest
        steps:
          - checkout
          - run:
              name: prepare terraform env vars
              command: |
                echo 'export ARM_SUBSCRIPTION_ID="${AZURE_SUBSCRIPTION_ID}"' >> $BASH_ENV
                echo 'export ARM_TENANT_ID="${AZURE_TENANT}"' >> $BASH_ENV
                echo 'export ARM_CLIENT_ID="${AZURE_SP_APPID}"' >> $BASH_ENV
                echo 'export ARM_CLIENT_SECRET="${AZURE_SP_PASSWORD}"' >> $BASH_ENV
                source $BASH_ENV
          - run:
              name: terraform plan
              command: |
                cd infrastructure
                terraform init
                terraform plan -out=tfplan -var "sql-login=$SQL_ADMIN_LOGIN" -var "sql-password=$SQL_ADMIN_PASSWORD" -var "sql-threat-email=$SQL_THREAT_EMAIL"
                echo "Checking status to continue..."
                count=$(terraform show tfplan | grep -c "Your infrastructure matches the configuration") || true
                if [ $count -gt 0 ]; then
                  echo "No changes, infrastructure is up to date";
                else
                  echo "******************************************************************"
                  echo "* Terraform plan requires changes to be applied, cannot continue *";
                  echo "* Apply terraform changes before retrying this job *";
                  echo "******************************************************************"
                  exit 1
                fi
                echo $(terraform output resource_group_name) > ../tf-resource_group_name
                echo $(terraform output app_name) > ../tf-app_name
                echo $(terraform output app_staging_url) > ../tf-app_staging_url
                echo $(terraform output db_server_name) > ../tf-db_server_name
                echo $(terraform output db_database_name) > ../tf-db_database_name
          - persist_to_workspace: # store the built files into the workspace for other jobs.
              root: ./
              paths:
                - "tf-*"
          # - discord/status:
          #     fail_only: true
          #     failure_message: "Application: **$CIRCLE_JOB** job has failed!"
          #     webhook: "${DISCORD_STATUS_WEBHOOK}"
          #     mentions: "@employees"

      deploy-application:
        docker:
          - image: mcr.microsoft.com/azure-cli:latest
        environment:
          VERSION_NUMBER: 0.0.0.<< pipeline.number >>
        steps:
          - attach_workspace:
              at: /tmp/workspace
          - run:
              name: import terraform outputs
              command: |
                cd /tmp/workspace
                resource_group_name=$(cat tf-resource_group_name)
                app_name=$(cat tf-app_name)
                app_staging_url=$(cat tf-app_staging_url)
                db_server_name=$(cat tf-db_server_name)
                db_database_name=$(cat tf-db_database_name)
                echo "export AZURE_RG_NAME=$resource_group_name" >> $BASH_ENV
                echo "export AZURE_APP_NAME=$app_name" >> $BASH_ENV
                echo "export AZURE_APP_STAGING_URL=$app_staging_url" >> $BASH_ENV
                echo "export DB_SERVER_NAME=$db_server_name" >> $BASH_ENV
                echo "export DB_DATABASE_NAME=$db_database_name" >> $BASH_ENV
                source $BASH_ENV
          - run:
              name: Install Dependencies
              command: |
                # dependencies to run dotnet db migration tool
                apk add libc6-compat
                ln -s /lib/libc.musl-x86_64.so.1 /lib/ld-linux-x86-64.so.2
                # dependencies for monitoring health checkout
                apk add nodejs
          - run:
              name: Capture public IP
              command: |
                public_ip=$(curl -s https://api.ipify.org)
                echo "export PUBLIC_IP=$public_ip" >> $BASH_ENV
                source $BASH_ENV
          - run:
              name: Azure Staging Deploy
              command: |
                cd /tmp/workspace
                ls -l
                # Deploy to staging slot
                az login --service-principal -u ${AZURE_SP_NAME} -p ${AZURE_SP_PASSWORD} --tenant ${AZURE_TENANT}
                echo "az webapp deployment source config-zip -g ${AZURE_RG_NAME} -n ${AZURE_APP_NAME} -s staging --src app-publish.zip"
                az webapp deployment source config-zip -g ${AZURE_RG_NAME} -n ${AZURE_APP_NAME} -s staging --src app-publish.zip
                echo "az webapp start -g ${AZURE_RG_NAME} -n ${AZURE_APP_NAME} -s staging"
                az webapp start -g ${AZURE_RG_NAME} -n ${AZURE_APP_NAME} -s staging
                # TEMP - add/update configs - this should be handled by a config mgmt service or re-usable script tied to config mgmt
                app_conn_string="Server=tcp:${DB_SERVER_NAME}.database.windows.net,1433;Initial Catalog=${DB_DATABASE_NAME};Persist Security Info=False;User ID=${SQL_APP_NAME};Password=${SQL_APP_PASSWORD};MultipleActiveResultSets=False;Encrypt=True;TrustServerCertificate=False;Connection Timeout=30;"
                az webapp config connection-string set -t SQLAzure -g ${AZURE_RG_NAME} -n ${AZURE_APP_NAME} -s staging --settings Database="${app_conn_string}"
                # Poll health endpoint, 500ms intervals, 15s timeout
                echo "Running healthcheck poll: https://${AZURE_APP_STAGING_URL}/health"
                cd /tmp/workspace/tools/PollHealthcheck
                node index.js https://${AZURE_APP_STAGING_URL}/health 500 15000
          - run:
              name: Database Migration
              command: |
                echo "Adding firewall rule for: ${PUBLIC_IP}"
                az login --service-principal -u ${AZURE_SP_NAME} -p ${AZURE_SP_PASSWORD} --tenant ${AZURE_TENANT}
                az sql server firewall-rule create --subscription ${AZURE_SUBSCRIPTION_ID} -s ${DB_SERVER_NAME} -g ${AZURE_RG_NAME} -n CircleCI-Job-$CIRCLE_JOB --start-ip-address $PUBLIC_IP --end-ip-address $PUBLIC_IP
                cd /tmp/workspace/app-migrate
                echo "Running migration..."
                conn_string="Server=tcp:${DB_SERVER_NAME}.database.windows.net,1433;Initial Catalog=${DB_DATABASE_NAME};Persist Security Info=False;User ID=${SQL_ADMIN_LOGIN};Password=${SQL_ADMIN_PASSWORD};MultipleActiveResultSets=False;Encrypt=True;TrustServerCertificate=False;Connection Timeout=30;"
                ./ELA.Tools.DatabaseMigration --ConnectionString "${conn_string}"
          - run:
              name: Database Migration - Firewall Cleanup
              command: |
                echo "Removing firewall rule for: ${PUBLIC_IP}"
                az login --service-principal -u ${AZURE_SP_NAME} -p ${AZURE_SP_PASSWORD} --tenant ${AZURE_TENANT}
                az sql server firewall-rule delete --subscription ${AZURE_SUBSCRIPTION_ID} -s ${DB_SERVER_NAME} -g ${AZURE_RG_NAME} -n CircleCI-Job-$CIRCLE_JOB
              when: always
          - run:
              name: Azure Production Swap
              command: |
                # Poll health endpoint, 500ms intervals, 15s timeout
                echo "Running healthcheck poll: https://${AZURE_APP_STAGING_URL}/health"
                cd /tmp/workspace/tools/PollHealthcheck
                node index.js https://${AZURE_APP_STAGING_URL}/health 500 15000
                # Swap into production
                echo "Performing swap..."
                az login --service-principal -u ${AZURE_SP_NAME} -p ${AZURE_SP_PASSWORD} --tenant ${AZURE_TENANT}
                az webapp deployment slot swap -g ${AZURE_RG_NAME} -n ${AZURE_APP_NAME} -s staging --target-slot production
                echo "Swap complete."
          - run:
              name: Shutdown staging slot
              command: |
                # Shutdown old version
                echo "Shutting down old version..."
                az webapp stop -g ${AZURE_RG_NAME} -n ${AZURE_APP_NAME} -s staging
              when: always
          - discord/status:
              fail_only: true
              failure_message: "Application: **$CIRCLE_JOB** deploy has failed!"
              webhook: "${DISCORD_STATUS_WEBHOOK}"
              mentions: "@employees"
          - discord/status:
              success_only: true
              success_message: "Application: **$CIRCLE_JOB** deployed successfully: $VERSION_NUMBER"
              webhook: "${DISCORD_STATUS_WEBHOOK}"

    workflows:
      build_and_deploy:
        jobs:
          - build-application
          - terraform-plan:
              filters:
                branches:
                  only: main
          - deploy-application:
              requires:
                - build-application
                - terraform-plan
              filters:
                branches:
                  only: main

Running the Builds the First Time

One thing to note if you decide to fork this and run it yourself (I'll add instructions to the project readme for that): there are some manual steps for an initial deploy. The issue is that we deploy the updated web application, then verify the health checks before committing and moving to the database migration.

This works well day-to-day. It reduces the amount of change we apply to production if something is terribly wrong with the application and we only just caught it (like a missing config for a new resource). But for the very first deploy, the database is completely uninitialized and the health check will fail.

Typically, I handle this with brute force. I comment out that initial healthcheck, run the build (which will now also apply the migrations, but then fail the later healthcheck), then uncomment and run it a second time. This primes everything and gets an initial, good production deploy at the cost of two iffy early commits.
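An alternative to commenting the healthcheck in and out would be a flag that skips it for the priming run. This is only a sketch of that idea, not something the pipeline does today. Both the `SKIP_INITIAL_HEALTHCHECK` variable and the `run_healthcheck_unless_skipped` helper are hypothetical names.

```shell
# Hypothetical helper: run the given health-check command unless
# SKIP_INITIAL_HEALTHCHECK is set to "true" (for example, on the very first deploy).
run_healthcheck_unless_skipped() {
  if [ "${SKIP_INITIAL_HEALTHCHECK:-false}" = "true" ]; then
    echo "Skipping pre-migration healthcheck"
    return 0
  fi
  # Run the command passed as arguments and return its exit status.
  "$@"
}

# Usage inside the Azure Staging Deploy step (not executed here):
#   cd /tmp/workspace/tools/PollHealthcheck
#   run_healthcheck_unless_skipped node index.js "https://${AZURE_APP_STAGING_URL}/health" 500 15000
```

The flag would need to be set for the first run and removed afterwards, so it swaps two throwaway commits for a temporary project setting.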